Building a Neural Network Framework from Scratch with Python

Published on September 3, 2024

This project involves creating a neural network framework from scratch using NumPy and Matplotlib. The primary goal was to build a customizable and extensible framework that allows users to train and test neural networks on a variety of datasets. The framework implements key components such as activation functions, forward and backward propagation, and model compilation and training.

Introduction

Creating a neural network framework from scratch is both challenging and rewarding. Through this project, I aimed to deepen my understanding of the inner workings of neural networks, going beyond the abstractions provided by popular libraries like TensorFlow and PyTorch. This post covers the challenges I faced, the key components of the implementation, and some example applications.

Project Goals

The goal of this project was to create a fully functional neural network framework using only NumPy and Matplotlib. By implementing everything from basic activation functions to the forward and backpropagation algorithms, I aimed to build a customizable and extensible framework for training and testing neural networks on a variety of datasets.

Key Features of the Library

- Custom Layers and Activation Functions: The library supports layers with different activation functions, including Sigmoid, ReLU, Tanh, and Linear. Each function is implemented together with its derivative: the function is used in the forward pass and the derivative in the backward pass, as backpropagation requires.

- Model Compilation and Training: Once the layers are defined, the model is compiled with a specified learning rate. Training can report metrics such as accuracy and can evaluate the model against a separate validation set.

- Backpropagation Implementation: Backpropagation computes the gradient of the loss with respect to each layer's weights and biases, and stochastic gradient descent uses those gradients to update the parameters. This is what allows the network to learn from data.

- Support for Various Datasets: The framework can be tested on a range of datasets covering both classification and regression problems, which helps validate the correctness and performance of the implementation.
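To make the backpropagation bullet above concrete, here is a minimal sketch of stochastic gradient descent in the simplest possible case: a single sigmoid neuron trained with binary cross-entropy loss. It is not the framework's code; the helper name `sgd_train_neuron`, the learning rate, the epoch count, and the toy AND dataset are all assumptions chosen for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sgd_train_neuron(X, y, learning_rate=0.5, epochs=2000, seed=0):
    """Train a single sigmoid neuron with per-sample (stochastic) gradient descent.

    Hypothetical helper for illustration. With binary cross-entropy loss, the
    gradient with respect to the pre-activation z simplifies to (a - y).
    """
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])  # one weight per input feature
    b = 0.0
    for _ in range(epochs):
        # Visit the samples in a new random order every epoch.
        for i in rng.permutation(len(X)):
            a = sigmoid(X[i] @ w + b)        # forward pass for one sample
            dz = a - y[i]                    # dLoss/dz for sigmoid + cross-entropy
            w -= learning_rate * dz * X[i]   # dLoss/dw = dz * x
            b -= learning_rate * dz          # dLoss/db = dz
    return w, b


# Toy, linearly separable problem: logical AND.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1], dtype=float)

w, b = sgd_train_neuron(X, y)
predictions = (sigmoid(X @ w + b) > 0.5).astype(int)
print(predictions)  # [0 0 0 1]
```

Updating after every individual sample, in a freshly shuffled order each epoch, is what makes this stochastic gradient descent; averaging the gradient over the whole dataset before each update would give ordinary (batch) gradient descent instead.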

Breaking Down the Code

The project consists of several key classes and functions, each responsible for different components of the neural network.

Activation Functions

The activation functions (Sigmoid, ReLU, Tanh, and Linear) determine each neuron's output from its weighted input; the non-linear ones (Sigmoid, ReLU, and Tanh) are what allow the network to model non-linear relationships. For example, Sigmoid squashes its input into the range (0, 1), which makes it particularly useful as the output activation for binary classification problems.
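A minimal sketch of how these four activations might be written, each paired with the derivative that backpropagation needs. This is an illustration rather than the project's actual implementation; the `ACTIVATIONS` dictionary and the convention that every function takes the pre-activation `z` are assumptions.

```python
import numpy as np

# Each activation is stored as (function, derivative). Both take the
# pre-activation z (the weighted input plus bias) and work element-wise.


def sigmoid(z):
    # Clip z so np.exp cannot overflow for very large magnitudes.
    return 1.0 / (1.0 + np.exp(-np.clip(z, -500, 500)))


def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def relu(z):
    return np.maximum(0.0, z)


def relu_prime(z):
    # Sub-gradient convention: 0 for z <= 0, 1 for z > 0.
    return (z > 0).astype(float)


def tanh(z):
    return np.tanh(z)


def tanh_prime(z):
    return 1.0 - np.tanh(z) ** 2


def linear(z):
    return z


def linear_prime(z):
    return np.ones_like(z)


ACTIVATIONS = {
    "sigmoid": (sigmoid, sigmoid_prime),
    "relu": (relu, relu_prime),
    "tanh": (tanh, tanh_prime),
    "linear": (linear, linear_prime),
}

z = np.array([-2.0, 0.0, 2.0])
for name, (f, df) in ACTIVATIONS.items():
    print(name, f(z), df(z))
```

Keeping each derivative next to its function means a backward pass can look up the right derivative for any layer without special-casing individual activations.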

Layers and MLP Model

The Layer class defines the structure of each layer, including the initialization of its weights and biases. The MLP class is the user-facing interface for defining the network's topology: users add layers to it and then compile the model. The compiled model is trained using the MLP_compiled class, which implements the forward pass, backpropagation, and optimization via gradient descent.
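A minimal sketch of how a builder/compiled-model split like the one described above might look. The names `DenseLayer`, `SimpleMLP`, `add`, `compile`, and `CompiledMLP` are hypothetical, chosen to mirror the roles described rather than the project's actual API, and the weight initialization (Gaussian scaled by 1/sqrt(fan-in), zero biases) is also an assumption. Only the forward pass is shown.

```python
import numpy as np


class DenseLayer:
    """A fully connected layer: weights, biases, and an activation name."""

    def __init__(self, n_inputs, n_units, activation="sigmoid", rng=None):
        rng = rng if rng is not None else np.random.default_rng()
        # Small random weights break the symmetry between units.
        self.W = rng.normal(0.0, 1.0 / np.sqrt(n_inputs), size=(n_inputs, n_units))
        self.b = np.zeros(n_units)
        self.activation = activation


class SimpleMLP:
    """User-facing builder: collects layers, then compiles them into a model."""

    def __init__(self, n_inputs, seed=0):
        self.n_inputs = n_inputs
        self.layers = []
        self.rng = np.random.default_rng(seed)

    def add(self, n_units, activation="sigmoid"):
        # A new layer's input size is the previous layer's output size.
        n_in = self.layers[-1].W.shape[1] if self.layers else self.n_inputs
        self.layers.append(DenseLayer(n_in, n_units, activation, self.rng))
        return self  # allows chaining: model.add(...).add(...)

    def compile(self, learning_rate=0.1):
        return CompiledMLP(self.layers, learning_rate)


class CompiledMLP:
    """Holds the layers plus training settings (forward pass only in this sketch)."""

    FUNCS = {
        "sigmoid": lambda z: 1.0 / (1.0 + np.exp(-z)),
        "relu": lambda z: np.maximum(0.0, z),
        "tanh": np.tanh,
        "linear": lambda z: z,
    }

    def __init__(self, layers, learning_rate):
        self.layers = layers
        self.learning_rate = learning_rate

    def forward(self, X):
        # X: (n_samples, n_features) -> each layer maps to (n_samples, n_units).
        a = X
        for layer in self.layers:
            a = self.FUNCS[layer.activation](a @ layer.W + layer.b)
        return a


model = SimpleMLP(n_inputs=4).add(8, "relu").add(3, "sigmoid").compile(learning_rate=0.05)
out = model.forward(np.zeros((5, 4)))
print(out.shape)  # (5, 3)
```

Separating a mutable builder from a compiled, trainable object is one way to keep topology definition and training logic apart, matching the division of responsibilities described above.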

Training and Evaluation

The training process feeds the data through the network, computes the loss between the predictions and the targets, and updates the weights via backpropagation. This part of the code is crucial because it determines how well the network learns from the data.
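The loop below is a minimal, self-contained sketch of that process for a network with one hidden layer, trained with full-batch gradient descent on mean squared error. It is an illustration under assumptions (the layer sizes, tanh/sigmoid activations, MSE loss, learning rate, and XOR data are all chosen for the example), not the framework's own training code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train(X, y, n_hidden=8, learning_rate=1.0, epochs=5000, seed=0):
    """Full-batch gradient descent for a 1-hidden-layer network (tanh -> sigmoid) on MSE."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(size=(X.shape[1], n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(size=(n_hidden, y.shape[1]))
    b2 = np.zeros(y.shape[1])
    losses = []
    for _ in range(epochs):
        # Forward pass: keep intermediate activations for the backward pass.
        a1 = np.tanh(X @ W1 + b1)
        a2 = sigmoid(a1 @ W2 + b2)
        losses.append(np.mean((a2 - y) ** 2))  # mean squared error

        # Backward pass: apply the chain rule from the output layer inwards.
        d_a2 = 2.0 * (a2 - y) / y.size          # dLoss/da2
        d_z2 = d_a2 * a2 * (1.0 - a2)           # sigmoid'(z2) = a2 * (1 - a2)
        d_W2, d_b2 = a1.T @ d_z2, d_z2.sum(axis=0)
        d_z1 = (d_z2 @ W2.T) * (1.0 - a1 ** 2)  # tanh'(z1) = 1 - a1**2
        d_W1, d_b1 = X.T @ d_z1, d_z1.sum(axis=0)

        # Gradient-descent update of every parameter.
        W2 -= learning_rate * d_W2
        b2 -= learning_rate * d_b2
        W1 -= learning_rate * d_W1
        b1 -= learning_rate * d_b1
    return (W1, b1, W2, b2), losses


def predict(params, X):
    W1, b1, W2, b2 = params
    return sigmoid(np.tanh(X @ W1 + b1) @ W2 + b2)


# XOR: a classic problem that a model without a hidden layer cannot solve.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

params, losses = train(X, y)
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
print(np.round(predict(params, X), 2))
```

Note that `d_z1` is computed with `W2` before `W2` is updated, so every gradient in an iteration refers to the same set of parameters.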

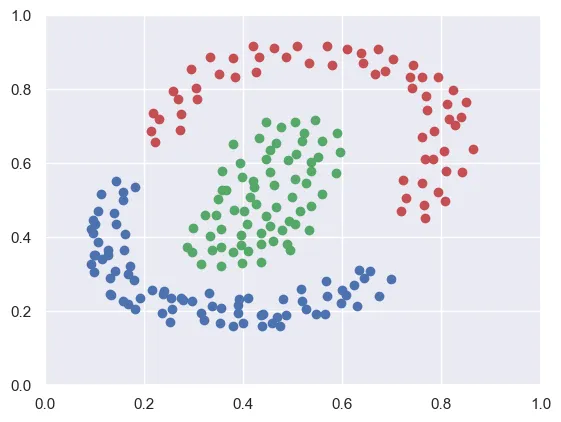

Testing the Framework with Datasets

After building the framework, I tested it on both artificial and well-known datasets. These tests let me evaluate the library's performance in practice and fine-tune the implementation. The datasets included an artificial point-classification problem and the Iris dataset, demonstrating that the framework can model non-linear relationships and handle standard benchmark datasets.
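For readers who want to reproduce a test like the artificial point classification, here is a sketch that generates a non-linear toy dataset, holds out a validation split, trains a small network, and measures accuracy. The circle-shaped dataset, the 80/20 split, the network size, and the helper names (`make_circle_data`, `train_val_split`, `accuracy`, `fit`) are assumptions; the post does not specify how its artificial dataset was built. Training here uses full-batch gradient descent on binary cross-entropy.

```python
import numpy as np


def make_circle_data(n_samples=500, radius=0.7, seed=0):
    """Hypothetical toy dataset: label 1 inside a circle, 0 outside (not linearly separable)."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(-1.0, 1.0, size=(n_samples, 2))
    y = (np.sum(X ** 2, axis=1) < radius ** 2).astype(float).reshape(-1, 1)
    return X, y


def train_val_split(X, y, val_fraction=0.2, seed=0):
    """Shuffle once, then hold out a fraction of the samples for validation."""
    idx = np.random.default_rng(seed).permutation(len(X))
    n_val = int(len(X) * val_fraction)
    val_idx, train_idx = idx[:n_val], idx[n_val:]
    return X[train_idx], y[train_idx], X[val_idx], y[val_idx]


def accuracy(probs, y, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the 0/1 label."""
    return float(np.mean((probs > threshold) == (y > 0.5)))


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit(X, y, n_hidden=16, learning_rate=0.5, epochs=5000, seed=0):
    """Tanh hidden layer + sigmoid output, full-batch gradient descent on cross-entropy."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(size=(X.shape[1], n_hidden))
    b1 = rng.normal(size=n_hidden)
    W2 = rng.normal(size=(n_hidden, 1))
    b2 = np.zeros(1)
    for _ in range(epochs):
        a1 = np.tanh(X @ W1 + b1)
        a2 = sigmoid(a1 @ W2 + b2)
        d_z2 = (a2 - y) / len(X)                # sigmoid + cross-entropy gradient
        d_z1 = (d_z2 @ W2.T) * (1.0 - a1 ** 2)  # backpropagate through tanh
        W2 -= learning_rate * (a1.T @ d_z2)
        b2 -= learning_rate * d_z2.sum(axis=0)
        W1 -= learning_rate * (X.T @ d_z1)
        b1 -= learning_rate * d_z1.sum(axis=0)
    # Return a prediction function that closes over the trained parameters.
    return lambda X_new: sigmoid(np.tanh(X_new @ W1 + b1) @ W2 + b2)


X, y = make_circle_data()
X_train, y_train, X_val, y_val = train_val_split(X, y)
predict = fit(X_train, y_train)
print("train accuracy:", accuracy(predict(X_train), y_train))
print("validation accuracy:", accuracy(predict(X_val), y_val))
```

Reporting accuracy on the held-out validation points, not only the training points, is what reveals whether the network has learned the circular boundary rather than memorized individual samples.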

Challenges and Learning Outcomes

Developing this library from scratch presented several challenges, especially debugging backpropagation and ensuring numerical stability during training. Overcoming them deepened my understanding of how neural networks work under the hood. A significant takeaway was the importance of efficient, vectorized matrix operations: expressing a layer's computation as a single NumPy matrix product rather than explicit Python loops leads to substantial performance gains.
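Two habits that help with exactly these problems are gradient checking (comparing backpropagated gradients against finite-difference estimates) and writing layers as matrix products instead of Python loops. The sketch below shows both for a single sigmoid layer with a squared-error loss; the layer, the loss, the step size `eps`, and the helper names are assumptions for illustration, not the project's code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def loss_fn(W, b, X, y):
    """Squared-error loss of a single sigmoid layer, vectorized over the batch."""
    a = sigmoid(X @ W + b)
    return 0.5 * np.sum((a - y) ** 2)


def analytic_grad_W(W, b, X, y):
    """Gradient dLoss/dW computed by backpropagation (chain rule)."""
    a = sigmoid(X @ W + b)
    d_z = (a - y) * a * (1.0 - a)
    return X.T @ d_z


def numerical_grad_W(W, b, X, y, eps=1e-6):
    """Central finite differences: nudge each weight up and down, re-evaluate the loss."""
    grad = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        W_plus, W_minus = W.copy(), W.copy()
        W_plus[idx] += eps
        W_minus[idx] -= eps
        grad[idx] = (loss_fn(W_plus, b, X, y) - loss_fn(W_minus, b, X, y)) / (2 * eps)
    return grad


def forward_loops(W, b, X):
    """The same forward pass with explicit Python loops (for comparison only)."""
    n_samples, n_inputs = X.shape
    n_units = W.shape[1]
    out = np.zeros((n_samples, n_units))
    for i in range(n_samples):
        for j in range(n_units):
            z = b[j]
            for m in range(n_inputs):
                z += X[i, m] * W[m, j]
            out[i, j] = sigmoid(z)
    return out


rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))
y = rng.uniform(size=(5, 2))
W = rng.normal(size=(3, 2))
b = rng.normal(size=2)

# 1. Gradient check: a tiny relative error suggests the backprop gradient is correct.
g_analytic = analytic_grad_W(W, b, X, y)
g_numeric = numerical_grad_W(W, b, X, y)
rel_error = np.linalg.norm(g_analytic - g_numeric) / (
    np.linalg.norm(g_analytic) + np.linalg.norm(g_numeric)
)
print("gradient check relative error:", rel_error)

# 2. The vectorized forward pass (one matrix product) matches the looped version.
print("forward passes match:", np.allclose(sigmoid(X @ W + b), forward_loops(W, b, X)))
```

On inputs this small the speed difference is negligible, but for realistic layer sizes the single `X @ W` call runs in optimized compiled code while the triple loop executes every multiply-add in the Python interpreter, which is where the large performance gap comes from.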

Conclusion and Next Steps

This project was a valuable learning experience, and I’m excited to continue refining and expanding the library. Future improvements could include adding support for more complex architectures (like CNNs or RNNs), implementing more advanced optimization techniques, and providing better visualization tools for model performance.

If you’re interested in neural networks and want to understand how they work at a low level, I encourage you to explore this project further. It’s a fantastic way to gain a deeper appreciation for the complexities of machine learning.