NOAA Web Analysis

Published on November 5, 2024

Project Overview

To build my skills in distributed deployment and web APIs, I developed a project that simulates the requirements of a large-scale distributed system. It consists of a distributed ETL (Extract, Transform, Load) pipeline that loads data into a relational database, which in turn backs an API serving data visualizations and weather predictions. The entire system runs in Docker containers orchestrated by Kubernetes and uses PySpark, TimescaleDB, and FastAPI to handle over 30 GB of NOAA's climatic data.

Objectives

- Scalability: Design a system capable of handling increasing data volumes without performance degradation.

- Efficiency: Optimize data processing times using distributed computing with Apache Spark.

- Reliability: Ensure high availability and fault tolerance through Kubernetes orchestration.

- Usability: Provide intuitive interfaces for data exploration and analysis via FastAPI.

- Predictive Analytics: Incorporate machine learning models to forecast future trends based on historical data.

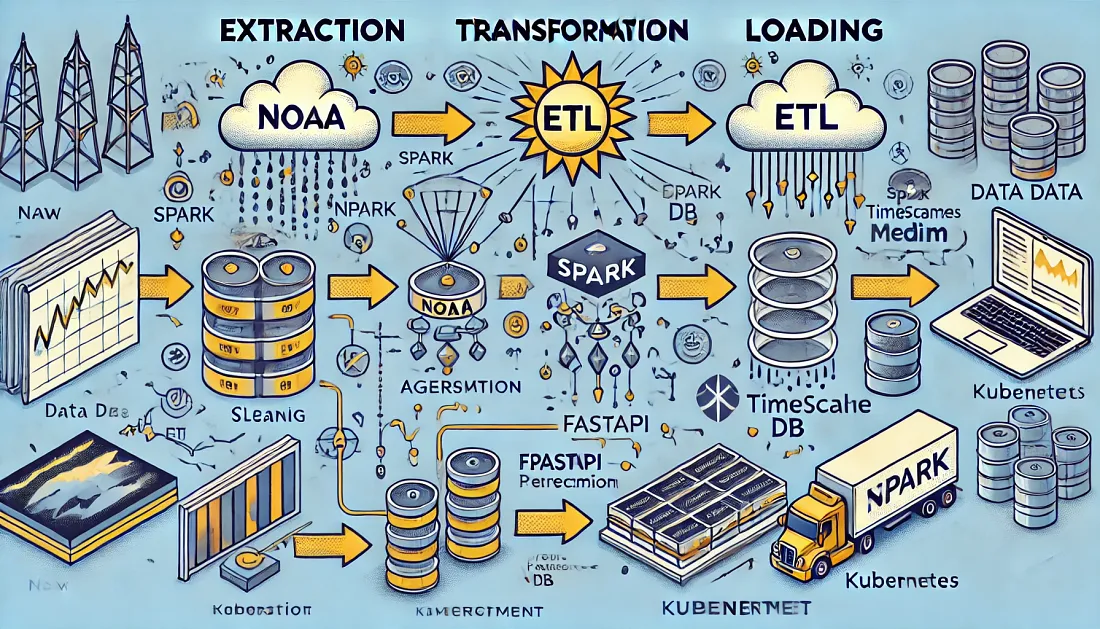

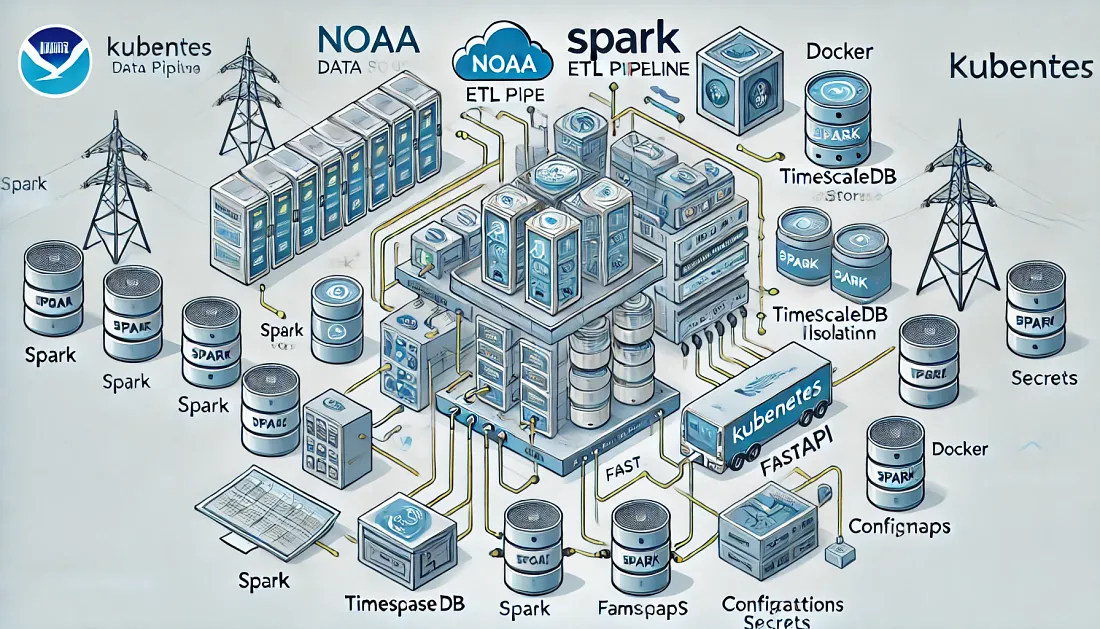

Architecture

The project's architecture is designed to emulate a distributed computing environment. The key components are:

- Data Source: NOAA's Global Historical Climatology Network-Daily (GHCN-Daily), comprising over 30 GB of daily climatic records.

- ETL Pipeline: A Spark cluster deployed on Kubernetes processes the massive datasets efficiently using PySpark.

- Data Storage: Transformed data is stored in TimescaleDB, a relational database optimized for time-series data, running within a Docker container.

- API and Visualization: A FastAPI application provides interactive dashboards and visualizations for data exploration.

- Predictive Analytics: An independent machine learning model container connects to TimescaleDB to perform daily predictions, with results accessible via the FastAPI application.

- Orchestration and Monitoring: Kubernetes orchestrates all containers, managing deployment, scaling, and monitoring to ensure seamless operation.

Article Summaries

1. Project Overview and Objectives

The first article introduces the project's motivation and goals and explains why handling large-scale data efficiently matters. It outlines the architecture and shows how the chosen technologies work together to achieve scalability, efficiency, reliability, and usability. It also explains the choice of tools: Docker for containerization, which provides consistency, isolation, and portability, and Kubernetes for orchestration, which provides automated deployment, scaling, and high availability.

2. Kubernetes Architecture and Deployment

The second article delves into the Kubernetes infrastructure that forms the backbone of the project. It covers the setup of namespaces, ConfigMaps, and Secrets to manage configurations and sensitive information securely. The article explains how Kubernetes orchestrates the deployment of various components, such as the Spark cluster, TimescaleDB, and FastAPI application. Key points include:

- Namespace Definition: Creating a dedicated namespace (`noaa-analytics`) to isolate project resources.

- ConfigMaps and Secrets: Managing environment variables and sensitive data securely using Kubernetes ConfigMaps and Secrets.

- Deployment Automation: Utilizing scripts to automate the application of configurations, ensuring consistent and error-free deployments.

- Scalability and Integration: Leveraging Kubernetes to scale components independently and maintain high availability.
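The namespace, ConfigMap, and Secret setup described above can be sketched as a small manifest generator. This is only an illustration, not the project's actual deployment script: the resource names `noaa-config` and `noaa-db-credentials`, the keys, and the example values are all hypothetical, and only the `noaa-analytics` namespace comes from the article. It relies on the fact that Kubernetes accepts JSON manifests and expects Secret `data` values to be base64-encoded.

```python
import base64
import json


def namespace_manifest(name: str) -> dict:
    """Namespace that isolates all project resources."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def config_map_manifest(name: str, namespace: str, data: dict) -> dict:
    """ConfigMap holding non-sensitive settings as plain strings."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": data,
    }


def secret_manifest(name: str, namespace: str, values: dict) -> dict:
    """Opaque Secret; Kubernetes requires base64-encoded values under `data`."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": encoded,
    }


def manifest_list(items: list) -> str:
    """Bundle several manifests into a single JSON document of kind List."""
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2)


if __name__ == "__main__":
    ns = "noaa-analytics"
    print(manifest_list([
        namespace_manifest(ns),
        # Hypothetical names and values, for illustration only.
        config_map_manifest("noaa-config", ns, {"POSTGRES_HOST": "timescaledb"}),
        secret_manifest("noaa-db-credentials", ns, {"POSTGRES_PASSWORD": "change-me"}),
    ]))
```

Piping the output to `kubectl apply -f -` would create the three resources in one step. Note that base64 is an encoding, not encryption, so real credentials should not be committed alongside a script like this.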

3. ETL Pipeline Implementation

The third article focuses on the ETL pipeline, the core of the data processing workflow. It details how over 30 GB of raw climatic data from NOAA is transformed into structured information ready for predictive analytics and interactive visualizations. Key aspects include:

- Apache Spark Utilization: Using PySpark on a Spark cluster to handle massive datasets efficiently with distributed computing.

- Custom ETL Functions: Developing a library of utility functions to standardize and modularize extraction, transformation, and loading operations.

- Data Transformation Process: Parsing fixed-width text files, handling missing values, unpivoting data, formatting dates, and cleaning data for storage.

- Integration with TimescaleDB: Loading transformed data into TimescaleDB, which is optimized for time-series data and supports geospatial queries through the PostGIS extension.

- Docker and Kubernetes Configuration: Containerizing the ETL pipeline with Docker and orchestrating it with Kubernetes for scalability, reliability, and efficient resource management.

- Benefits Highlighted: Emphasizing efficiency in data processing, scalability, code modularity, seamless database integration, geospatial data handling, and automation.
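The parsing and unpivoting steps listed above can be illustrated on a single record. The sketch below uses plain Python rather than PySpark so that it runs without a cluster, and it is not the project's ETL library: the `Observation` type and the `parse_dly_line` function are hypothetical names. It assumes the documented GHCN-Daily `.dly` layout, in which each line holds one station, month, and element, followed by 31 daily slots of 8 characters each (a 5-character value plus three 1-character flags), with -9999 marking a missing value.

```python
from datetime import date
from typing import Iterator, NamedTuple

MISSING = -9999  # GHCN-Daily sentinel for a missing daily value


class Observation(NamedTuple):
    station_id: str
    obs_date: date
    element: str
    value: int  # raw integer as stored in the file, no unit conversion


def parse_dly_line(line: str) -> Iterator[Observation]:
    """Unpivot one fixed-width .dly record into one row per valid day."""
    station_id = line[0:11]
    year = int(line[11:15])
    month = int(line[15:17])
    element = line[17:21]

    for day in range(1, 32):
        start = 21 + (day - 1) * 8      # each daily slot is 8 characters wide
        raw = line[start:start + 5]     # first 5 characters hold the value
        if not raw.strip():
            continue                    # truncated or blank slot
        value = int(raw)
        if value == MISSING:
            continue                    # drop missing observations
        try:
            obs_date = date(year, month, day)
        except ValueError:
            continue                    # slot for a day the month does not have
        yield Observation(station_id, obs_date, element, value)
```

In a Spark job, the same slicing would typically be expressed with column substring operations or applied per line across partitions. The flag characters are ignored here for brevity; a fuller version might keep the quality flag to filter out suspect readings.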

Conclusion and Future Work

The project demonstrates the integration of multiple technologies to create a scalable, efficient, and reliable system for processing and analyzing large-scale climatic data. By combining Apache Spark, TimescaleDB, FastAPI, Docker, and Kubernetes, the project addresses key challenges in big data processing and provides a solid foundation for further enhancements.

Future work includes:

- Advanced Analytics: Incorporating sophisticated machine learning models for predictive analytics.

- Real-Time Data Processing: Enhancing the ETL pipeline to handle streaming data for real-time analytics.

- Security Enhancements: Implementing advanced security measures to protect data integrity and privacy.

- User Management: Developing role-based access controls within the API application.

- Monitoring and Logging: Integrating tools like Grafana for real-time system monitoring and visualization.